# Histogram dim3 grid serial

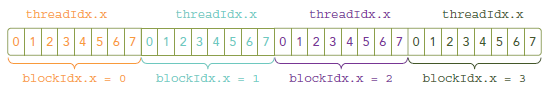

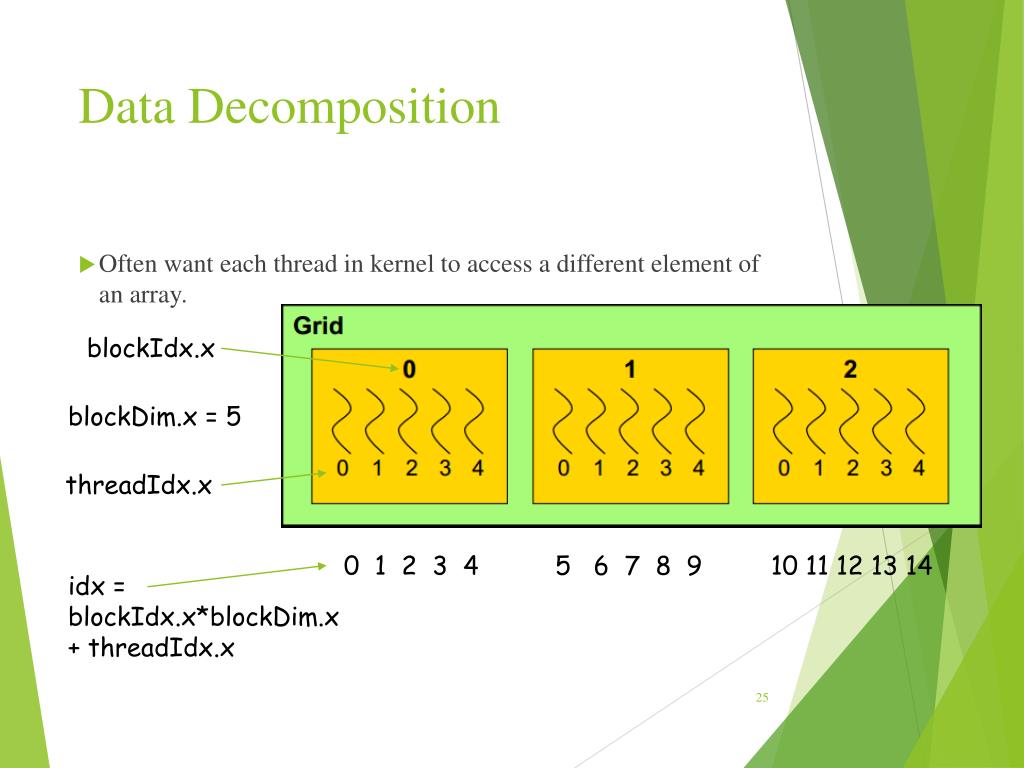

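A `dim3` contains a collection of three integers (X, Y, Z) and is declared as `dim3 my_xyz(x_value, y_value, z_value);`. Its values are accessed as members, e.g. `int x = my_xyz.x;`. Inside a kernel, `threadIdx` gives the location of a thread within a block and `blockIdx` gives the location of a block within a grid, while `blockDim` and `gridDim` give their sizes. `Idx` values use zero-based indices; `Dim` values are sizes, e.g. (3,2,1).

Example: students arrive at halls of residence to check in. Unfortunately admission rates are down! Each student can be moved from room i to room 2i so that no-one has a neighbour.

Serial solution: the receptionist handles one student at a time in a loop, `for (i=0; i<N; i++)`, performing the following tasks: ask the student their assigned room number i, then move them to room 2i.

Parallel solution: the receptionist announces "Everybody check your room number. …", and every student moves to room 2i at the same time.

In CUDA, a kernel is launched as `myKernel<<<…>>>(arguments)`, with the grid and block dimensions given inside the triple angle brackets. To use a single block with one thread per student, declare `dim3 blocksPerGrid(1,1,1);` (use only one block) and `dim3 threadsPerBlock(N,1,1);` (use N threads in the block), then call `myKernel<<<blocksPerGrid, threadsPerBlock>>>(result);`.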
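The parallel room-number solution can be sketched as a small CUDA program. This is a minimal sketch, not the original slide code: the kernel name `moveRooms`, the host helper `runMoveRooms`, and returning each student's new room number in an array are assumptions for illustration. Each thread reads its own zero-based `threadIdx.x` as its room number i and writes 2i, using the single-block launch configuration described above.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical kernel: thread i plays student i and moves from room i to room 2i.
__global__ void moveRooms(int* newRoom)
{
    int i = threadIdx.x;   // zero-based index of this thread within its (only) block
    newRoom[i] = 2 * i;    // every student moves at the same time
}

// Hypothetical host helper: launches one block of n threads and copies the
// new room numbers into hostRooms. Returns true if every CUDA call succeeded.
bool runMoveRooms(int n, int* hostRooms)
{
    assert(n > 0 && n <= 1024 && "a single block holds at most 1024 threads");

    int* deviceRooms = nullptr;
    if (cudaMalloc(&deviceRooms, n * sizeof(int)) != cudaSuccess)
        return false;

    dim3 blocksPerGrid(1, 1, 1);    // use only one block
    dim3 threadsPerBlock(n, 1, 1);  // use n threads in the block
    moveRooms<<<blocksPerGrid, threadsPerBlock>>>(deviceRooms);

    cudaError_t err = cudaGetLastError();  // reports launch-configuration errors
    if (err == cudaSuccess)                // cudaMemcpy waits for the kernel to finish
        err = cudaMemcpy(hostRooms, deviceRooms, n * sizeof(int),
                         cudaMemcpyDeviceToHost);
    cudaFree(deviceRooms);
    return err == cudaSuccess;
}
```

Because one block is limited to 1024 threads on current NVIDIA GPUs, this single-block launch only suits small N; larger inputs need a grid of several blocks.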

# Histogram dim3 grid portable

The hardware is abstracted as a grid of thread blocks, so you don't need to know the hardware characteristics. Oversubscribe: launch more blocks than there are SMs and more threads than there are cores, and allow the hardware to perform the scheduling. As a result, the code is portable across different GPU versions.
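A histogram is a natural fit for an oversubscribed grid of blocks. The sketch below is an illustration under stated assumptions rather than the original course code: `histogramKernel`, `runHistogram`, `NUM_BINS = 16` and the bin rule `value % NUM_BINS` are hypothetical choices. The grid size is computed from the input length alone, never from the number of SMs, so the same launch runs on any GPU and the hardware schedules the blocks; `atomicAdd` keeps concurrent increments to the same bin correct.

```cuda
#include <cassert>
#include <cuda_runtime.h>

constexpr int NUM_BINS = 16;  // hypothetical number of histogram bins

// Each thread classifies one input element (hypothetical rule: value % NUM_BINS).
__global__ void histogramKernel(const unsigned char* data, int n, unsigned int* bins)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global element index
    if (i < n)                                      // the last block may be partly empty
        atomicAdd(&bins[data[i] % NUM_BINS], 1u);   // safe across threads and blocks
}

// Hypothetical host helper: returns true if every CUDA call succeeded.
bool runHistogram(const unsigned char* hostData, int n, unsigned int* hostBins)
{
    assert(n > 0);
    const size_t binBytes = NUM_BINS * sizeof(unsigned int);
    unsigned char* dData = nullptr;
    unsigned int* dBins = nullptr;

    cudaError_t err = cudaMalloc(&dData, n);
    if (err == cudaSuccess) err = cudaMalloc(&dBins, binBytes);
    if (err == cudaSuccess) err = cudaMemcpy(dData, hostData, n, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemset(dBins, 0, binBytes);
    if (err == cudaSuccess) {
        const unsigned int threads = 256;
        dim3 threadsPerBlock(threads, 1, 1);
        dim3 blocksPerGrid((n + threads - 1) / threads, 1, 1);  // round up; ignores SM count
        histogramKernel<<<blocksPerGrid, threadsPerBlock>>>(dData, n, dBins);
        err = cudaGetLastError();
    }
    if (err == cudaSuccess) err = cudaMemcpy(hostBins, dBins, binBytes, cudaMemcpyDeviceToHost);

    cudaFree(dData);  // cudaFree(nullptr) is a no-op
    cudaFree(dBins);
    return err == cudaSuccess;
}
```

With 1000 elements and 256 threads per block this launches 4 blocks, the last only partly used, whether the GPU has a handful of SMs or many.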

An Introduction to Programming with CUDA, Paul Richmond

Overview: Motivation; Introduction to CUDA Kernels; CUDA Memory Management.

Traditional sequential languages are not suitable for GPUs. CUDA allows NVIDIA GPUs to be programmed in C/C++ (and also in Fortran). It provides language extensions for compute "kernels", which execute on multiple threads concurrently, and API functions that provide management (e.g. memory, synchronisation, etc.).

Figure: the CPU, with its DRAM, runs the main program code and is connected over the PCIe bus to an NVIDIA GPU with GDRAM, which runs the GPU kernel code.

The data set is decomposed into a stream of elements. A single computational function (the kernel) operates on each element, and a thread is the execution of the kernel on one data element. The multiple cores of the Streaming Multiprocessors can operate on multiple elements in parallel.

Figure: hardware model, a GPU made up of several SMs, each with its own shared memory, all connected to device memory.

Each Streaming Multiprocessor (SM) has multiple cores, and the number of SMs and cores per SM varies between GPUs.
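Because the number of SMs differs between GPUs, it can be queried at run time with the CUDA runtime call `cudaGetDeviceProperties`, whose `cudaDeviceProp::multiProcessorCount` field holds the SM count. The helper name `smCount` is hypothetical. The runtime does not report cores per SM directly; that figure depends on the compute capability (`prop.major`, `prop.minor`).

```cuda
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: prints and returns the SM count of `device`,
// or returns -1 if the device cannot be queried.
int smCount(int device)
{
    assert(device >= 0 && "CUDA device ordinals start at 0");
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess)
        return -1;
    std::printf("%s: %d SMs (compute capability %d.%d)\n",
                prop.name, prop.multiProcessorCount, prop.major, prop.minor);
    return prop.multiProcessorCount;
}
```

A portable kernel launch never needs this value; it is only useful for reporting or tuning, since the grid-of-blocks model lets the hardware map blocks onto however many SMs exist.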
